Data Storage Solution: Hardware & OS (Part 2 of 3)

Overview

This entry covers the technical details of the implementation.

I approached this by breaking the stack down into individual layers and then conducting performance, security and data management reviews.

The Physical Stack

- Storage Devices

- Storage Controller

- Server

- Network

- Site

- User

Storage Devices

smartctl -i <dev>

This is a 100% SSD solution. I provide a separate drive for boot (1x500GB Samsung 850 EVO) and an array (2x1TB Samsung 850 EVO) for storage. This division is mostly historical but it saves me the trouble of setting up a bootable partition on the RAID array.

I could not afford >2 disks or >1TB so I will have to make do with a 1TB RAID1 mirror. This is about 3x larger than the existing array so I expect it to give me a 3yr buffer until larger-capacity SSDs are available.

Storage Controller

lspci

This Intel bridge provides 5x3Gbps and 1x6Gbps SATA ports.

The boot drive is attached to the 6G port, and the storage array (as well as a DVD drive) is attached to the 3G ports. 3G carries roughly 300 MB/s of payload, which is more than sufficient for my applications, including streaming video, and the 6G port should provide optimal boot times, desktop latency, app loading and server response.
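As a rough check on the port speeds, the sketch below converts a SATA line rate into usable payload bandwidth. It assumes only the 8b/10b line encoding that SATA uses; the function name is made up for illustration, and real-world throughput will be somewhat lower because of protocol overhead.

```shell
#!/bin/sh
# Usable SATA payload bandwidth in MB/s for a given line rate in Gbit/s.
# SATA uses 8b/10b encoding: every 8 data bits travel as 10 bits on the wire.
sata_payload_mbs() {
    gbps=$1
    # Gbit/s -> Mbit/s (x1000), strip 8b/10b overhead (x8/10), bits -> bytes (/8)
    echo $(( gbps * 1000 * 8 / 10 / 8 ))
}

echo "3G port: $(sata_payload_mbs 3) MB/s"
echo "6G port: $(sata_payload_mbs 6) MB/s"
```

Comparing these figures with a drive's rated sequential throughput from its datasheet shows whether the port or the drive is the limiting factor.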

Server

lscpu

This is an Intel(R) Core(TM) i5-3570 CPU @ 3.40GHz. This provides 4x 64bit cores, 1 thread per core. I've installed 4GB DDR3. I find that this is more than sufficient for any task except for processing audio and video. It does well in these applications for all but the impatient.

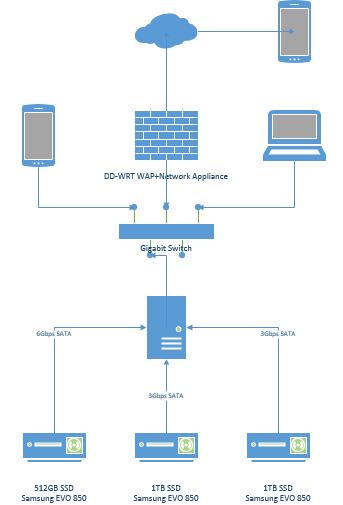

Network

The network is a gigabit switch and a DD-WRT firewall which also acts as an access point and a gateway. All connections on the network are ethernet except for laptops and smartphones. All servers are firewalled and the gateway is also firewalled.

Site

This is my physical residence, so it is subject to power outages, break-ins, burst water pipes and things of that nature.

User

This is primarily for myself and those I choose to share my data with.

The Logical Stack

- Partitioning

- File System

- Raid Controller

- IO Scheduler

- Operating System

- Apps/Services

The Design

Partitioning

The boot drive contains the root partition which stores the operating system, a swap partition and a scratch partition.

The scratch partition is a working space for project experiments and in no way contains data that should be preserved.

A typical root partition holds user data in /home and /var so this will need preservation. As a result the array contains a 20GB partition each for /var and /home, with the remainder dedicated to data storage. For details see Data Management.

fdisk -l <dev>:

Boot Drive Partitioning (/dev/sda):

Disk /dev/sda: 465.8 GiB, 500107862016 bytes, 976773168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x000ee05f

Device     Boot    Start       End   Sectors   Size Id Type
/dev/sda1  *        2048  41965567  41963520    20G 83 Linux
/dev/sda2       41965568  50354175   8388608     4G 82 Linux swap / Solaris
/dev/sda3       50354176 976773167 926418992 441.8G 83 Linux

Storage Array Partitioning (/dev/sd{b,c}):

Disk /dev/sdb: 931.5 GiB, 1000204886016 bytes, 1953525168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: 23ABB100-66E6-4A0A-B0A4-E7560756A29A

Device        Start        End    Sectors   Size Type
/dev/sdb1      2048   39063551   39061504  18.6G Linux filesystem
/dev/sdb2  39063552   78125055   39061504  18.6G Linux filesystem
/dev/sdb3  78125056 1953525134 1875400079 894.3G Linux filesystem

EXT4 File System

tune2fs -l <dev> to view filesystem options and configuration

stat -f mount_point to check block size and block and inode summary

dumpe2fs -h <dev> to check fs configuration (dumpe2fs takes the device, not the mount point)

https://man7.org/linux/man-pages/man5/ext4.5.html

EXT4 is one of the most mature, yet state-of-the-art file systems available. It provides the most consistent and still exceptional performance over many workloads, platforms and configurations. It also reserves some portion of the drive for root user in situations where the drive is full and you are trying to recover. It also supports TRIM and is compatible with SSD. For these reasons I chose EXT4 for the root partition.

There are a few specific EXT4 optimizations:

- Set Maximum mount count with tune2fs -c 100

- Set Check interval with tune2fs -i 6m

- Journal size: the default is 128MB. I found little information on whether changing it matters

- Block size (for data): 4096 is a good value for most filesystems (and matches the kernel page size); larger sizes (bigalloc) do not appear to be stable on ext4. The block size must also take the block size of the physical media into account and must be <= PAGESIZE on Linux. 4096 bytes is supposed to provide a good balance of performance and disk use efficiency in a modern compute environment (for files < 4096 bytes in size)

- Reserved block count: should be 1% or 1GB, whichever is smaller: tune2fs -m 1 <dev>

- Inode design: the default inode size should be 256 bytes on EXT4. Choose the total inode quantity such that it is twice your expected file count for that file system.

- otherwise ext4 provides good default settings

EXT4 by default chooses a block size of 4096 bytes. 4096 is also an exact multiple of 512, the drive's sector size, so alignment is achieved.
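The alignment claim can be checked with a little arithmetic: a partition is aligned when its byte offset (start sector times sector size) is an exact multiple of the filesystem block size. The following is a minimal sketch; the function name is hypothetical and the start sectors are copied from the fdisk listings above.

```shell
#!/bin/sh
# Succeeds when a partition's byte offset is an exact multiple of the
# filesystem block size.
is_aligned() {
    start_sector=$1   # "Start" column from fdisk -l
    sector_bytes=$2   # logical sector size reported by fdisk
    block_bytes=$3    # filesystem block size
    [ $(( start_sector * sector_bytes % block_bytes )) -eq 0 ]
}

# Start sectors taken from the fdisk listings above
for start in 2048 41965568 50354176 39063552 78125056; do
    if is_aligned "$start" 512 4096; then
        echo "sector $start: aligned"
    else
        echo "sector $start: NOT aligned"
    fi
done
```

A partition starting at the legacy sector 63 would fail this check, which is why current partitioning tools default to a start at sector 2048.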

mkfs.ext4 -m 1 -b 4096 -i 32768 /dev/sda1

tune2fs -c 100 /dev/sda1

tune2fs -i 1m /dev/sda1
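To see what the -i and -m choices work out to, here is a small sketch that estimates the inode count from the bytes-per-inode ratio and applies the "1% or 1GB, whichever is smaller" rule. The function names are hypothetical, and the inode figure is an approximation: mkfs.ext4 rounds the real count per block group.

```shell
#!/bin/sh
# Approximate number of inodes for a given size and -i (bytes-per-inode) value.
inode_count() {
    fs_bytes=$1
    bytes_per_inode=$2
    echo $(( fs_bytes / bytes_per_inode ))
}

# Reserved space in bytes: 1% of the filesystem or 1 GiB, whichever is smaller.
reserved_bytes() {
    fs_bytes=$1
    one_percent=$(( fs_bytes / 100 ))
    one_gib=$(( 1024 * 1024 * 1024 ))
    if [ "$one_percent" -lt "$one_gib" ]; then
        echo "$one_percent"
    else
        echo "$one_gib"
    fi
}

# /dev/sda1 spans 41963520 sectors of 512 bytes (from the fdisk listing)
root_bytes=$(( 41963520 * 512 ))
echo "inodes:   $(inode_count "$root_bytes" 32768)"
echo "reserved: $(reserved_bytes "$root_bytes") bytes"
```

With the "twice your expected file count" rule, the estimated inode count should be at least double the number of files you expect on the root partition. For filesystems where 1% exceeds 1GB, tune2fs -r sets the reservation as a block count instead of a percentage.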

XFS File System

xfs_info mount_point for filesystem configuration

https://man.archlinux.org/man/mkfs.xfs.8

For the remainder of the partitions, data tends to be read and written in large chunks or as many small files. So I looked for a fs that is known for its performance and scalability. It must also play nice with SSDs. Any optimization for large transfers that may adversely affect smaller bits of data is made up for by the fact that the hardware is so fast. I looked at JFS, XFS and F2FS. F2FS is available in Debian but uncommon on servers. They are all quite interesting and JFS is great, but XFS is well known for its performance and scalability, so I chose XFS for the remainder of the partitions.

There are a few specific XFS optimizations:

- use the v5 on-disk format; this is the default, and the mkfs.xfs defaults enable all the required features

- block size: follow the block size of the media; must be <= PAGESIZE on Linux

- allocation groups: minimum of 8, increase for high io drives

- sunit,swidth: set to match your RAID hardware in order to align the block and stripe sizes with the partitions and on disk block

- inode quantity: leave inode size the same, but reduce inode allocation to 5% for smaller drives and 1% for larger drives. The defaults are 25% and 5% respectively which is ginormous.

- cannot find much info on impact of log size

- otherwise xfs provides good default settings

For the /var and /home partitions XFS chooses a default block size of 4096bytes with no striping.

For the media storage partition I wanted larger allocation units to reflect the files that will be stored there (i.e. almost always >1MB, usually >10MB and sometimes >1GB in size). Since the XFS block size cannot exceed the page size, I left the block size at its default and instead set a large stripe unit (the su value in the commands below), telling XFS to optimize for a 2-disk RAID1 mirror.

mkfs.xfs -f -d agcount=8,sw=1,su=512k -i maxpct=1 /dev/md0

mkfs.xfs -f -d agcount=8,sw=1,su=512k -i maxpct=1 /dev/md1

mkfs.xfs -f -d agcount=8,sw=1,su=512k -i maxpct=1 /dev/md2

mkfs.xfs -f -d agcount=8 -i maxpct=1 /dev/sda3
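The su/sw pair used above and the sunit/swidth pair mentioned in the list describe the same geometry in different units: su is a byte size and sw a count of data disks, while mkfs.xfs expresses sunit and swidth in 512-byte sectors. A minimal sketch of the conversion, with hypothetical function names:

```shell
#!/bin/sh
# Convert a stripe unit given in KiB to the 512-byte sector units
# used by the sunit/swidth options.
su_kib_to_sunit() {
    echo $(( $1 * 1024 / 512 ))
}

# swidth is sunit multiplied by the number of data disks (sw)
swidth() {
    echo $(( $1 * $2 ))
}

sunit=$(su_kib_to_sunit 512)                      # su=512k from the commands above
echo "sunit=$sunit swidth=$(swidth "$sunit" 1)"   # sw=1
```

So su=512k,sw=1 should be equivalent to sunit=1024,swidth=1024. Comparing against xfs_info output after formatting is a sensible sanity check, keeping in mind that the units it reports may differ.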

Raid Controller

cat /proc/mdstat

mdadm --detail dev_device

mdadm --detail --scan (for creating a new /etc/mdadm/mdadm.conf)

https://wiki.archlinux.org/title/RAID

https://raid.wiki.kernel.org/index.php/RAID_setup

This implementation uses software RAID. I have always avoided motherboard-based / Intel RAID solutions for fear of portability issues and lack of restoration options. So I will continue to use mdadm.

| Device | Options | Metadata |
|---|---|---|
| /dev/md0 | default | v1.2 |
| /dev/md1 | default | v1.2 |
| /dev/md2 | default,64k chunk,bitmap | v1.2 |

There are a few specific mdadm optimizations:

- stripe width: generally means the number of data-bearing disks in the array

- chunk size: a multiple (or power of 2) of the linux block size (which is 4096)

- check your file systems for compatibility with mdadm. Typically they require specific commands to be optimized and aligned with mdadm (e.g. stripe size)

Command Summary:

mdadm --create --verbose /dev/md0 --level=mirror --raid-devices=2 /dev/sdb1 /dev/sdc1

mdadm --create --verbose /dev/md1 --level=mirror --raid-devices=2 /dev/sdb2 /dev/sdc2

mdadm --create --verbose --chunk=512k /dev/md2 --level=mirror --raid-devices=2 /dev/sdb3 /dev/sdc3

mdadm --detail --scan /dev/md{0,1,2} >> /etc/mdadm/mdadm.conf

cat /proc/mdstat

mdadm --detail /dev/md{0,1,2}

/proc/mdstat:

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]
md1 : active raid1 sdc2[1] sdb2[0]
      19514368 blocks super 1.2 [2/2] [UU]

md2 : active raid1 sdc3[1] sdb3[0]
      937568960 blocks super 1.2 [2/2] [UU]
      bitmap: 0/7 pages [0KB], 65536KB chunk

md0 : active raid1 sdc1[1] sdb1[0]
      19514368 blocks super 1.2 [2/2] [UU]

unused devices: <none>
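In the listing above, [UU] means both mirror members are up; a failed or missing member shows as an underscore, e.g. [U_]. The sketch below scans mdstat-formatted text for arrays in that state. The function name is hypothetical, and this is only an illustration of reading the format, not a replacement for mdadm's own monitor mode.

```shell
#!/bin/sh
# Print the name of every md array whose member status (e.g. [UU])
# contains an underscore, which marks a missing or failed member.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }
        match($0, /\[[U_]+\]/) {
            if (index(substr($0, RSTART, RLENGTH), "_") > 0) print name
        }
    '
}

# Read the live status when available; prints nothing if all arrays are healthy
if [ -r /proc/mdstat ]; then
    degraded_arrays < /proc/mdstat
fi
```

Note that an array in the middle of a resync also shows an underscore, so a hit here means "look closer" rather than "a disk has died".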

Mounting Options

Because both filesystems keep most of their configuration options in their superblocks, there is not much for fstab to do except tell the operating system that they exist. Perhaps more useful, here is a list of the mount points.

Mount Options Summary:

| Device | Mount Point | Mount Options |
|---|---|---|
| UUID | / | relatime,errors=remount-ro |
| UUID | /mnt/scratch | defaults,logbsize=256k,relatime |
| /dev/md0 | /var | defaults,logbsize=256k,relatime |
| /dev/md1 | /home | defaults,logbsize=256k,relatime |
| /dev/md2 | /mnt/files | defaults,logbsize=256k,relatime |

# /etc/fstab: static file system information.
#
#
proc                                      /proc         proc        defaults                         0 0
UUID=3f227b45-acd3-478a-bccd-d700143e9951 /             ext4        relatime,errors=remount-ro       0 1
UUID=d0ad06f1-7996-4f77-97a7-ad6d4d772547 /mnt/scratch  xfs         defaults,logbsize=256k,relatime  0 2
UUID=bc65b7d4-69e3-4ec2-8593-22fd219b133b none          swap        sw                               0 0
UUID=b07525a3-b1c4-4b6c-84b9-92f95b41aeef /var          xfs         defaults,logbsize=256k,relatime  0 2
UUID=4a11ac93-87a3-45ed-815a-79a0d2369e21 /home         xfs         defaults,logbsize=256k,relatime  0 2
UUID=83f8576f-e261-4e0c-8645-82c2e5437bfc /mnt/files    xfs         defaults,logbsize=256k,relatime  0 2
/dev/sr0                                  /media/cdrom0 udf,iso9660 user,noauto                      0 0

The relatime option is a write optimization. It cuts back access-time (atime) updates, a filesystem feature that is rarely (if ever) used by the OS or apps, and can significantly reduce the number of small writes to a drive, thereby increasing the performance and lifespan of the drive.

Disk Organization Summary

| Device | Start | End | Type | Mount Point | dev | Size | FS |
|---|---|---|---|---|---|---|---|
| sda1 | 2048 | 41965567 | Linux | / | /dev/sda1 | 20.0GB | ext4 |
| sda3 | 50354176 | 976773167 | Linux | /mnt/scratch | /dev/sda3 | 441.8GB | xfs |
| sd{b,c}1 | 2048 | 39063551 | Linux | /var | /dev/md0 | 18.6GB | xfs |
| sd{b,c}2 | 39063552 | 78125055 | Linux | /home | /dev/md1 | 18.6GB | xfs |
| sd{b,c}3 | 78125056 | 1953525134 | Linux | /mnt/files | /dev/md2 | 894.3GB | xfs |

IO scheduler

The deadline scheduler is known to provide the best performance on XFS, and from the various tests I've seen online it provides a small benefit, or at least does no harm, on EXT4. It attaches an expiry time to each request, which bounds how long any request can wait.

- deadline gives the best performance and lowest latency.

Add block/sda/queue/scheduler = deadline to sysfs

Add block/sdb/queue/scheduler = deadline to sysfs

Add block/sdc/queue/scheduler = deadline to sysfs
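To confirm the setting took effect, the kernel exposes the available schedulers in /sys/block/<dev>/queue/scheduler and marks the active one with square brackets. This is a small sketch for reading that file; the function name is hypothetical. On kernels that use the multi-queue block layer the equivalent scheduler is named mq-deadline, so check which names your kernel actually offers before writing the sysfs entries.

```shell
#!/bin/sh
# Extract the active scheduler, which the kernel marks with square brackets,
# from the contents of /sys/block/<dev>/queue/scheduler.
active_scheduler() {
    sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

# Report the active scheduler for each disk that exists on this machine
for dev in sda sdb sdc; do
    f=/sys/block/$dev/queue/scheduler
    if [ -r "$f" ]; then
        echo "$dev: $(active_scheduler < "$f")"
    fi
done
```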

Kernel Tuning

Persistent Access

/etc/sysctl.d/* /etc/sysfs.d/*

Realtime

/proc/sys/vm/* /sys/*

Tuning Parameters

- Process Scheduling: tbd

- CPU (frequency scaling/perf): tbd

- Physical Memory: tbd

- VM (swap/oom behavior): swappiness is reduced to nearly its minimum value, so RAM is used in preference to swap

Add vm.swappiness=1 to sysctl

- VM (caching, buffering, etc): tbd

- IO Scheduling: tbd

- IO Bus (SATA): tbd

- IO Bus (PCIe): tbd

- IO Bus (DDR): tbd

- Disk: tbd, https://www.linux-magazine.com/Online/Features/Tune-Your-Hard-Disk-with-...

- Block: tbd

- Notes: kernel tuning may also require writing (NVRAM) to BIOS, controllers or disks

Operating System

The operating system is Debian "Buster" 10.x.

File system Permissions:

- /var and /home inherit distro (LSB) permissions

- /mnt/files has all files and directories owned by root:backup. All users have read access except for a secure backup folder. Depending on the application the backup group will have read-only or read-write access.

- A nightly cron job searches for "out of spec" files and directories in the storage array and corrects them.
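As an illustration of what such a nightly check might look like (this is a sketch under my own assumptions, not the actual job), find can list entries that break the "everyone can read" rule. The function name is hypothetical and the block only reports; the correction step is left as a comment because it needs root.

```shell
#!/bin/sh
# List entries under a directory that are not readable by "other" users.
# Reporting only; a possible correction step is shown commented out below.
list_not_world_readable() {
    find "$1" ! -perm -0004 -print
}

# Hypothetical nightly usage (run as root), following the policy above:
#   list_not_world_readable /mnt/files
#   find /mnt/files \( ! -user root -o ! -group backup \) -exec chown root:backup {} +
```

The secure backup folder is deliberately not world-readable, so a real job would need to exclude it (for example with -prune) before correcting anything.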

Apps

There are several types of apps that make use of this data:

- Dolphin (and desktop apps)

- Baloo (desktop search)

- Media apps

- System Monitoring

Dolphin (and desktop apps)

Not much needs to be changed. Apps will work out of the box. But tweaking Dolphin can bring you to your data quicker:

- Set up shortcuts to Scratch, Storage and Projects

- Enable full path inside location bar

- Use common properties for all folders

- Set up Trash: 90days/10%

Baloo (desktop search)

This is KDE's semantic search. The default settings will attempt to index your entire disk, which is incredibly wasteful in both disk space and activity and also tends to fill your search results with noise.

- Make sure to Enable Desktop Search in KDE System Settings->Desktop Search.

- Add "Do Not Search" exemptions in KDE System Settings->Desktop Search.

Unfortunately baloo uses a blacklist and I want a whitelist. The only folders I want baloo to index are:

- /home

- /media

- /mnt (not including rdiff backups)

This results in approximately 75k indexed files available for search.

Media apps

Amarok is my primary Linux desktop media app. The primary media server is Plex.

System Monitoring

The goal is to enable monitoring and reporting at all levels of the stack.

| Item | Monitor | Notes |
|---|---|---|
| Storage Devices | smartmontools, Munin, lm-sensors | The drives are S.M.A.R.T.-enabled and I use Munin to track usage, temperature and other S.M.A.R.T. metrics. I set alarms for critical items. |
| Partitions | backupninja | Tool to back up partition information nightly |
| File System | Munin | Capacity and integrity tracking. |
| Operating System/Server | sysstat, Munin, logcheck, lm-sensors, top, iotop | Environmental, load, performance (e.g. fragmentation) and system metrics. Logcheck monitors logs for abnormal events, RAID failures, alarms, etc. Does systemd automatically check integrity and fragmentation? |
| IO scheduler | NA | Not needed |
| Network | firewall | Firewall logs abnormal events and an IDS looks for attack signatures |
| Site | NA | Not needed/possible |
| Apps | Munin | Most services are tracked with Munin. However, baloo and service pinging or network pinging are not enabled. |
| User | Munin | Munin tracks load status |

Notes on upgrading the OS

- Review kernel and filesystem support tool changelogs for fs changes

- review changes to mdadm

- sometimes you can enable new features

- other times you have to recreate the filesystem

Notes on partition copying

More googling revealed that rsync is a simple-to-use and extremely effective disk copy utility in situations where the partition size or file system differs.

The commands I used for copying partitions were as follows. The first performs the actual copy and the second double-checks it.

rsync -avxHAWX --info=progress2 <src> <dst> > ~/rsync.out

rsync -avxHAWX --info=progress2 <src> <dst>
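A second rsync pass decides what to re-copy from file size and modification time by default. For a stricter double-check, rsync can compare file contents in a dry run. The wrapper below is a sketch with a hypothetical function name; it leaves out the ACL and extended-attribute flags so it also runs where rsync was built without them.

```shell
#!/bin/sh
# Dry-run comparison of two trees by file content.
# Prints one itemized line per entry that differs; prints nothing when the
# trees match. Both paths should end in "/" to compare directory contents.
verify_copy() {
    # -a archive, -H hard links, -x stay on one filesystem,
    # -n dry run (change nothing), -c compare checksums, -i itemize differences
    rsync -aHxnci "$1" "$2"
}
```

Usage would look like verify_copy /home.old/ /home/ after the copy has finished. Checksumming reads every file on both sides, so expect it to take about as long as the copy itself.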

Notes on replacing a partition

- backup partition (or backup twice)

- wipe partition (any method)

- create new partition (if necessary)

- create mdadm array

- create new filesystem

- restore data

- verify data

- edit /etc/fstab mount options

- edit /etc/mdadm/mdadm.conf persistent array options

- update initramfs (so it knows about the new UUIDs, otherwise it will automap them)

Partition Identification:

/sbin/blkid to show block device UUIDs, labels and types

/sbin/lsblk to show disks, partitions, maj:min, ro vs rw, removable vs fixed, size, type and mountpoint

Preserving a Partition:

cp -aR --preserve=all /home.old/* /home

xfsdump / xfsrestore

Notes on the boot loader

- continue to use GRUB2

- use GPT when possible (my old motherboard may not support it, so the boot drive is old-school MBR for now)

- updating the bootloader:

update initramfs: update-initramfs -u -k all
